Parameter Update: 2026-01

"holidays" edition

Hey! - it's been a while! While I initially meant to postpone a single post during NeurIPS, things kinda slipped out of my hands between Christmas with the family and getting sick directly after. All better now though, so let's catch up on the most important things that happened over the course of the last month (less than you'd probably think - it was the holidays after all). Before we get into it, though, I want to quickly take a look back at NeurIPS, given that this year really felt like a unique moment of the community coming together. I had a wonderful time and many good conversations, and left California feeling incredibly refreshed and energized about what's next. That being said, if anyone was still doubting that there might be a bubble in AI, I would disagree with them from the top of the literal aircraft carrier Cohere hosted their after-party on.

OpenAI

GPT-5.2

After declaring Code Red in early December, OpenAI has shot back at Google's Gemini 3 and Anthropic's Opus 4.5 launches with the GPT-5.2 series of models. Despite the incremental name change, this is quite a big upgrade and definitely a "Gemini 3" class model (i.e., a step-function improvement over the previous generation).

GPT-5.2 evals pic.twitter.com/hVWVkKX3mt

— OpenAI Developers (@OpenAIDevs) December 11, 2025

The most impressive gains are made on OpenAI's new GDPval, which is meant to represent performance on "well-specified knowledge work tasks". Realistically, this means the model can make much prettier PowerPoints and Excel sheets, which actually does feel like a pretty big unlock. The other big improvement is the ability to work across long contexts, including across multiple context windows via compression - we first saw OpenAI focus on this with GPT-5.1-Codex-Max and it's equally impressive here.

We also got an updated version of the gpt-5.1-codex series, which brings both the intelligence and the long-context improvements to the CLI.

gpt-image-1.5

In what appears to be another "Code Red" launch, we also got OpenAI's upgraded image model. While it is a bit faster than before and results seem substantially better, it still lags behind Nano Banana Pro in most metrics, so this doesn't feel like the win they could really use right now. The most interesting thing here is probably Sama's announcement:

For example: pic.twitter.com/qcEEjfG8g0

— Sam Altman (@sama) December 16, 2025

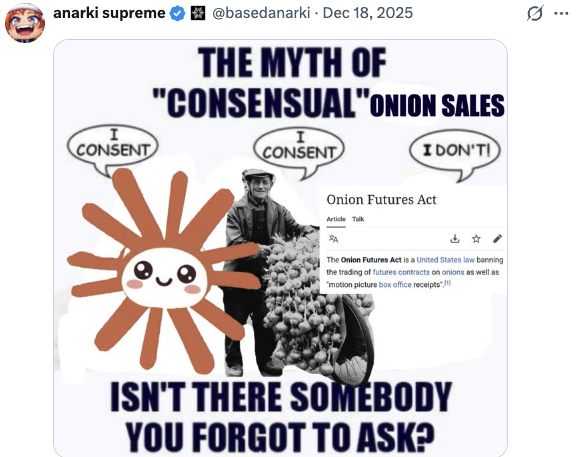

At the very least, it appears to mostly be used to generate questionable images of consenting participants at this point, which is in stark contrast to Grok, which is currently experiencing a "viral moment" after sad assholes realized "no content filter" means they could ask for bikini pictures of any woman (or child, in some particularly disturbing cases) on Twitter. (Seriously, do not look at @Grok's media tab if you want to keep your sanity.)

Are you fucking kidding me right now pic.twitter.com/Zj89FHHzco

— pokey pup (@Whatapityonyou) January 3, 2026

NYT Lawsuit

Continuing last week's lawsuit drama, OpenAI took another L in early December, when the judge re-affirmed they really are expected to provide the NYT with 20 million anonymized ChatGPT conversations. So while the judgement on the main lawsuit is still out, as a ChatGPT user, I will note - it does not feel great that my data might be handed to random NYT lawyers?

Anthropic

Buying TPUs

Following their earlier announcement of closer cooperation moving forward, Anthropic has now announced they would be buying around 1 million "Ironwood" TPUs directly from... Broadcom? Not sure how/where Google is in this (don't they own the design?), but either way, this hopefully signals the end of Anthropic's compute drought.

Skills seeing mass adoption

Just a few weeks ago I reported on Anthropic's new "Skills" standard and how, while neat, I wasn't sure how far adoption would go (to me, MCP really felt like catching lightning in a bottle). Well, turns out I appear to be fully off the mark here, with Skills seeing massive adoption in recent weeks, most notably from OpenAI, which is now shipping skills inside their code interpreter sandbox (discovered, as far as I can tell, by Simon Willison).

Nvidia: Selling H200 in China

The US Commerce Department has finally lifted restrictions on Nvidia selling H200 GPUs to China. Within days, they sold 2 million units and ran back to TSMC for more. This feels like a direct attack on the viability of China's national GPU market (which is just now starting to become competitive). Either way - not great news for memory prices all around.

Reuters: Nvidia placed an urgent order with TSMC as demand for the H200 in China surges.

Nvidia currently has 700,000 H200 units in inventory, but China has ordered more than 2 million H200 units. $NVDA pic.twitter.com/nwcWElLi04

— Jukan (@jukan05) December 31, 2025

Google: Gemini 3 Flash

Positioned as a strong "workhorse" model, Gemini 3 Flash matches or outperforms Gemini 2.5 Pro everywhere it matters. While I still wouldn't recommend a Flash model for most people most of the time (Gemini 3 is really not that much more expensive), this is a very good step toward reducing the price of intelligence across the spectrum.

Meta buys Manus

While everyone was busy enjoying the holidays/anticipating New Year's, Zuck was out there closing deals. On December 30, Meta announced they would be acquiring Manus for over $2 billion. On Twitter, people were wondering about the value proposition here - Manus doesn't really have big tech IP to offer and their user growth, while impressive, can't really compete with Meta's multi-billion userbase. TechCrunch seems to have found an angle, though: $2B isn't really that much for a company like Meta, and "For Zuckerberg, who has staked Meta’s future on AI, Manus represents something new: an AI product that’s actually making money" - lol!

Nvidia acquihires Groq

As part of a "non-exclusive licensing agreement", Groq's CEO and other senior leadership will move to Nvidia, which will also receive a license to Groq's LPU technology. While the rest of the company supposedly continues independently, it's hard not to note that the "acqui-hire" playbook at play here has not usually led to the business finding success on its own after someone came and took its core talent and the rights to its tech.