Parameter Update: 2025-5

"DeeperSeeker" edition

DeepSeek R1

At least for the first part of the week, R1 still seemed like the only thing anyone wanted to talk about. Dario Amodei (Anthropic CEO) has some fun/interesting takes, but somehow the whole thing still manages to sound like cope to me. In personal news, I am really excited to see meinGPT offer DeepSeek R1 on GDPR-compliant EU infrastructure - without the front-loaded censorship (though it still seems to only acknowledge Taiwan some of the time). If anyone would like to take it for a spin, feel free to reach out!

OpenAI: o3-mini

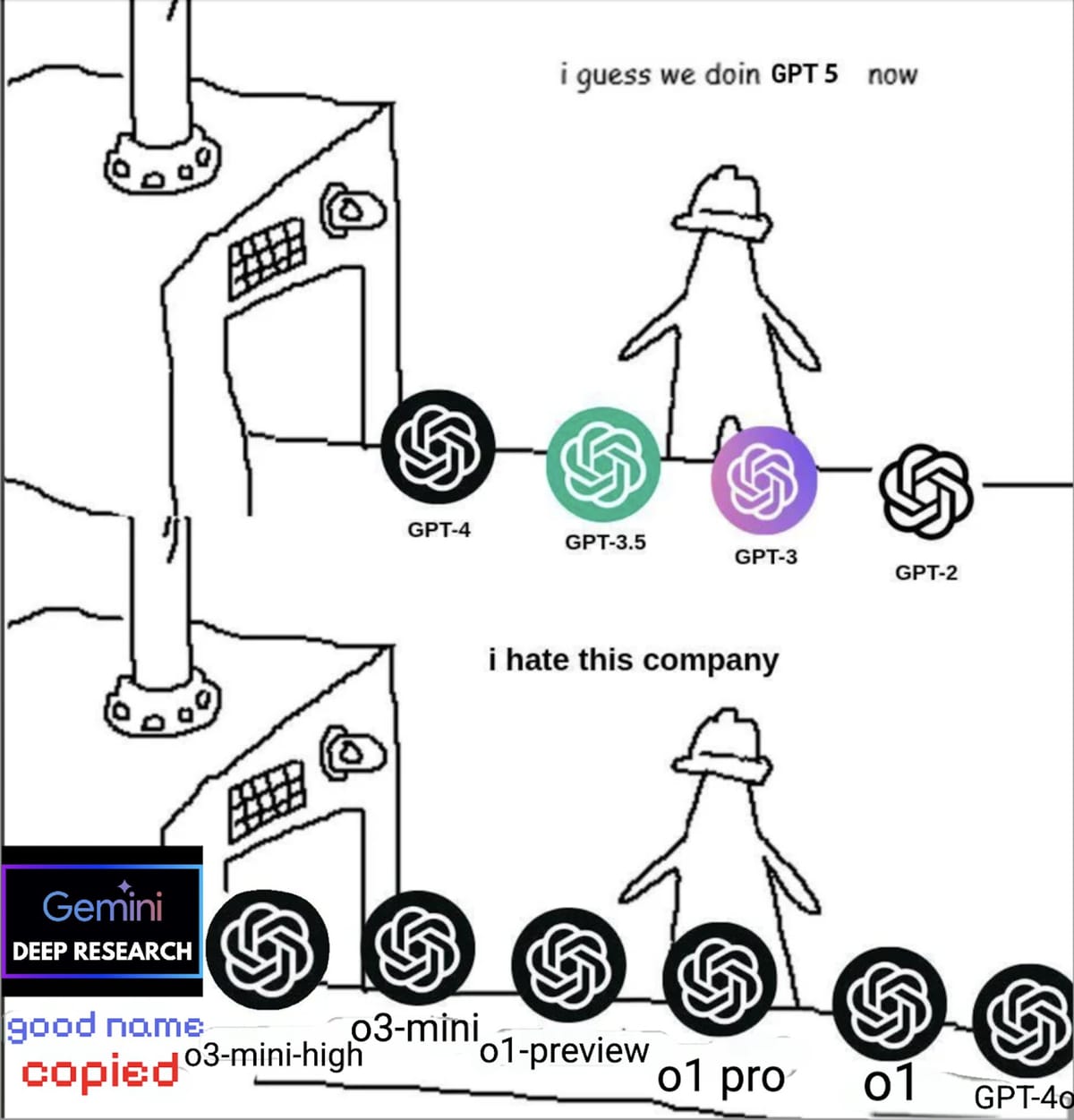

In what may be their worst-named announcement to date, OpenAI has opened access to their o3-mini series of models. It feels like an increasingly direct response to R1: this is the first time they have opened a TTC (test-time compute) model to free users. Plus and Pro subscribers, meanwhile, also get access to o3-mini-high and higher rate limits throughout. At the same time, API access seems like a solid option for many use cases.

While some people seemed preoccupied with generating fun animations and arguing about whether ChatGPT now shows the full reasoning chain (it doesn't, though something may be coming), I am very happy about the overall vibes of the model as well as the giant speedup when using it for stuff that required full o1 before.

OpenAI: Deep Research

Late Sunday evening, OpenAI announced their competitor to Google's Deep Research mode. Powered by full-fat o3 (not o3-mini, apparently!), this is their next agent offering. The agent goes down a research rabbit hole and searches the web for a few minutes, preparing a final report at the end - even for topics that are far, far beyond the scope of current "SerpAPI + LLM" tools like Perplexity. As an additional tool, it seems that the agent also has access to a Code Interpreter session.

Access will be limited to the Pro tier for now (though we did get an EU launch!) and I'm excited to get my hands on it, as, based on the demos, I can actually see it justifying the $200/month spend for some very specific people in some industries. On the other hand, Altman has talked publicly about how they're fully planning to bring the tool to the Plus and even Free tiers later on.

I enjoy how OpenAI has just completely given up on any form of comprehensibility for their naming, just stealing the name from DeepMind. One Easter egg suggested calling it "Deeper Seeker", which I love.

Never one to stay out of the limelight, Sam Altman delivered my personal quote of the week: "my very approximate vibe is that it can do a single-digit percentage of all economically valuable tasks in the world". While that seems a bit optimistic, what we actually have access to seems like a pretty cool product overall (at least going by the swath of people who had access to it before the announcement).

However you spin it, having the model score 25% on Humanity's Last Exam (very metal name, but somewhat inaccurate) right after o3-mini managed 13% is cool. The comparison is very unfair, though: o3-mini didn't get access to the same set of tools, and HLE tests specifically for obscure knowledge, where you would expect this type of agent to excel.

Google: "Ask for me"

In what might be the most gen-z-coded feature release ever, Google now has an experimental feature that has their Duplex assistant (remember I/O 2018?) call a business to find out details on your behalf. I am really trying to hate this (and can definitely see it becoming annoying for businesses), but there is something undeniably appealing about it.

We’re testing right now with auto shops and nail salons, to see how AI can help you connect with businesses and get things done. pic.twitter.com/inf5hhj1BS

— Rose Yao (@dozenrose) January 30, 2025

Mistral Small 3

Mistral reminded us this week that they do in fact still exist, announcing a "latency focused" 24B parameter model that performs admirably for its size. Even as DeepSeek's rather lazy censorship once again highlights the need for European sovereignty, and the EU pushes forward with open initiatives that seem slightly underfunded at 37 million euros, I can't help but wonder: how do they plan to make money with this thing?

Alibaba: Qwen2.5-VL & Qwen2.5-Max

While not being a TTC model definitely hurts its appeal, I am happy to see Alibaba scaling up its impressive Qwen2.5 model into a "max" variant, outperforming the overwhelming majority of traditional LLMs.

Perhaps more interesting to most people is Qwen2.5-VL, a version of the model tuned for interacting with visuals. I'm especially looking forward to testing some needle-in-the-haystack type benchmarks on its video comprehension, to see how well it stacks up against some of the demos Google has been cooking.

M-A-P YuE

Just as I expected Suno to have effectively cornered the Text-to-Music modality with its truly impressive v4 model released late last year (Udio's last release was "folders" over three months ago??), China once again comes to the rescue.

Released by the open-source collective "Multimodal Art Projection", YuE is, by quite some margin, the most impressive leap we have seen in open-source music generation over the last couple of months. While certainly not quite reaching Suno's level of polish, the results are still scary good and better than they have any right to be.

Personally, I am looking forward to generating some Taiwanese independence hymns as soon as I can get a functional demo up and running - let's see!