Parameter Update: 2025-35

"machines!" edition

For a week without a big lab release, it's been a surprisingly interesting one!

GLM-4.5 in Claude Code

Chinese startup Z.ai and its flagship GLM-4.5 had been flying under my radar for a while, as I didn't see anything spectacular or novel about the model worth reporting on. Because of that (and because I've mostly been using Codex CLI recently), I missed their new GLM Coding Plan offer last week, so thanks for pointing it out, Atheer! The plan offers a drop-in replacement for Claude Sonnet 4 inside Claude Code, roughly matching its performance, for just $6/month (with the first month discounted to $3!). I've tried it out briefly and it has felt almost flawless; the only thing missing compared to Anthropic's $200/month plan is broader access to Opus, which hardly seems worth the price difference. With that in mind, it would seem that (1) the era of subsidised, practically free compute is not coming to an end yet and (2) Anthropic really has been taking more than a few losses recently!

Claude: Code Interpreter

Announced as the "ability to create and edit files", this feature was called out by Simon Willison for what it is: Claude finally has a code interpreter! Combined with MCP and search, it enables quite a few interesting use cases, most of which appear to be focussed on Excel for now:

Claude just replicated my profile pic in an excel file

We're entering the vibe excel era pic.twitter.com/FJllZXyWyQ

— Alex Albert (@alexalbert__) September 10, 2025

Qwen3-Next

Following up on the Qwen3 announcements of the last couple of months (and Qwen3-Max just last week), Qwen3-Next feels like a much more experimental run, designed to push long-context, training, and inference efficiency rather than to strictly maximize performance. It combines a bunch of new (or at least new at this scale) techniques: an extreme MoE split (80B total parameters, only 3B active per token), a hybrid of Gated DeltaNet and gated attention layers, and native multi-token prediction. While I would have liked a longer write-up, seeing experimentation at this scale being published is still great, and I do appreciate a Hugging Face release!
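Gated DeltaNet is probably the least familiar ingredient here. As I understand it from the Gated DeltaNet paper, each such layer replaces the growing attention cache with a fixed-size state matrix S that is updated once per token: a gate alpha decays the old memory, and the "delta rule" uses a write strength beta to replace whatever value is stored under key k instead of just adding to it, i.e. S_t = alpha_t * (S_{t-1} - beta_t * (S_{t-1} k_t) k_t^T) + beta_t * v_t k_t^T, read out as o_t = S_t q_t. Below is a toy, single-head, pure-Python sketch of that recurrence. It is not Qwen's implementation and leaves out everything a real one needs (multiple heads, normalisation, parallel training kernels); the helper names are hypothetical.

```python
# Toy single-head sketch of the gated delta rule (Gated DeltaNet-style
# linear attention). Illustrative only, NOT Qwen3-Next's implementation.
Matrix = list[list[float]]
Vector = list[float]


def zeros(d_v: int, d_k: int) -> Matrix:
    """Empty memory: a d_v x d_k state matrix."""
    return [[0.0] * d_k for _ in range(d_v)]


def matvec(S: Matrix, x: Vector) -> Vector:
    return [sum(row[j] * x[j] for j in range(len(x))) for row in S]


def gated_delta_step(S: Matrix, k: Vector, v: Vector,
                     alpha: float, beta: float) -> Matrix:
    """S_new = alpha * (S - beta * (S k) k^T) + beta * v k^T.

    alpha in (0, 1] decays the whole memory (the gate);
    beta in [0, 1] controls how strongly the value stored under key k
    is overwritten by v (the delta rule). k is assumed L2-normalised.
    """
    old = matvec(S, k)  # value the memory currently returns for key k
    return [
        [alpha * (S[i][j] - beta * old[i] * k[j]) + beta * v[i] * k[j]
         for j in range(len(k))]
        for i in range(len(v))
    ]


def read(S: Matrix, q: Vector) -> Vector:
    return matvec(S, q)  # o = S q


k = [1.0, 0.0]
S = zeros(2, 2)
S = gated_delta_step(S, k, [1.0, 2.0], alpha=1.0, beta=1.0)
S = gated_delta_step(S, k, [5.0, -1.0], alpha=1.0, beta=1.0)
print(read(S, k))  # [5.0, -1.0]: the second write replaced the first
S = gated_delta_step(S, [0.0, 1.0], [0.0, 0.0], alpha=0.5, beta=1.0)
print(read(S, k))  # [2.5, -0.5]: the gate halved the old memory
```

The point of the example: plain additive linear attention (S += v k^T) would have returned [6.0, 1.0] after the two writes, smearing both values together, while the delta rule overwrites and the gate lets old context fade. Because S never grows, per-token cost stays constant with context length, which is why mixing these layers with a smaller number of full attention layers helps long-context efficiency.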

Pretraining Efficiency & Inference Speed pic.twitter.com/nGn0iQrJgi

— Qwen (@Alibaba_Qwen) September 11, 2025

ByteDance Seedream 4.0

Just two weeks after Google officially launched its "nano-banana" image model, it already has serious competition! ByteDance's (yes, the TikTok people!) new Seedream 4.0 technically beats Google's model (at least on the Artificial Analysis leaderboard), but personally I find the two evenly matched on image editing. The real advantages are that Seedream, in my experience, errors out a lot less often, can produce outputs up to 4K, and beats nano-banana on aesthetics for pure text-to-image generation (which seems to be the Google model's main weakness, hence the "dual availability" of nano-banana and Imagen 4). While ByteDance's own platform is massively complicated (and slow), availability on Fal seems like a good indicator of things to come.

Thinking Machines

Much has been said about Mira Murati's "Thinking Machines" startup and its ridiculous $12 billion pre-product valuation, probably rightfully so. But this week we finally got a slight peek behind the curtain. In the first post on their new "Connectionism" blog (cool name!), they explore the causes of, and potential fixes for, nondeterminism in LLM generations. It's an issue that feels like it has got worse as reasoning models have emerged, with OpenAI even removing the "temperature" parameter entirely for its reasoning models. Fully deterministic behaviour feels like a very useful property to have on the way to building superintelligence, so good on them for pushing that frontier!
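The root of the problem, as I understand the post, is that floating-point addition isn't associative, and that many inference kernels are not "batch invariant": the way a reduction is split up can change with batch size, so the same request can produce slightly different numbers depending on what else the server is processing at that moment. Their proposed fix is kernels that use a fixed reduction order regardless of batch size. The toy sketch below shows the effect in plain Python; it is not Thinking Machines' code, and `split` is a hypothetical stand-in for "how a kernel partitions the work".

```python
# Toy illustration of why reduction order matters (not Thinking Machines' code).
# `split` is a hypothetical stand-in for how a kernel might partition a
# reduction differently depending on batch size.


def sequential_sum(values):
    """Add values strictly left to right (avoids builtin sum(), which uses
    compensated summation for floats on newer Python versions)."""
    total = 0.0
    for x in values:
        total += x
    return total


def split_sum(values, split):
    """Reduce two chunks independently, then combine the partial sums."""
    return sequential_sum(values[:split]) + sequential_sum(values[split:])


def batch_invariant_sum(values, split):
    """Same result however the work 'would' be split: the reduction
    order is fixed and `split` is deliberately ignored."""
    return sequential_sum(values)


vals = [0.1, 0.2, 0.3]
print(split_sum(vals, 1))  # 0.1 + (0.2 + 0.3) -> 0.6
print(split_sum(vals, 2))  # (0.1 + 0.2) + 0.3 -> 0.6000000000000001
print(batch_invariant_sum(vals, 1) == batch_invariant_sum(vals, 2))  # True
```

A one-ulp difference looks harmless, but in an LLM it can flip which token has the highest probability, after which the two generations diverge completely. Fixing the order trades some kernel flexibility (and possibly speed) for reproducibility.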

Cursor: new Tab model

Cursor has a new Tab model! I know that with a headline like "21% fewer suggestions" it doesn't sound inspiring, but I swear it's fascinating. Why? To my knowledge, they are the first to do online RL at this scale. That means the model is continuously being trained on real user behaviour, with new model versions rolled out every 1.5 to 2 hours!

We've trained a new Tab model that is now the default in Cursor.

This model makes 21% fewer suggestions than the previous model while having a 28% higher accept rate for the suggestions it makes.

Learn more about how we improved Tab with online RL. pic.twitter.com/WYTYaynnEI

— Cursor (@cursor_ai) September 11, 2025
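The two numbers in Cursor's announcement combine multiplicatively, since accepted suggestions = suggestions shown × accept rate. A quick back-of-the-envelope check, assuming "28% higher accept rate" means a relative increase (×1.28) rather than 28 percentage points; the tweet doesn't say which. `relative_accepted` is a hypothetical helper.

```python
def relative_accepted(suggestion_change: float, accept_rate_change: float) -> float:
    """Accepted suggestions of the new model relative to the old one.

    accepted = shown * accept_rate, so relative changes multiply.
    ASSUMPTION: both changes are relative (e.g. +0.28 means x1.28).
    """
    return (1 + suggestion_change) * (1 + accept_rate_change)


ratio = relative_accepted(-0.21, 0.28)  # 0.79 * 1.28
print(f"accepted suggestions vs. old model: {ratio:.4f}x")
```

Under that assumption the new model lands almost exactly as many accepted suggestions as before (about 1% more) while showing 21% fewer, so the win is fewer distracting, rejected suggestions rather than more accepted code.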

So far, I haven't felt much of a difference, but I am also very clearly not a power user of Tab, so make of that what you will. Either way, my Twitter timeline has been having fun with it (and asking pointed questions about training on user data, the potential to poison the model, and so on).

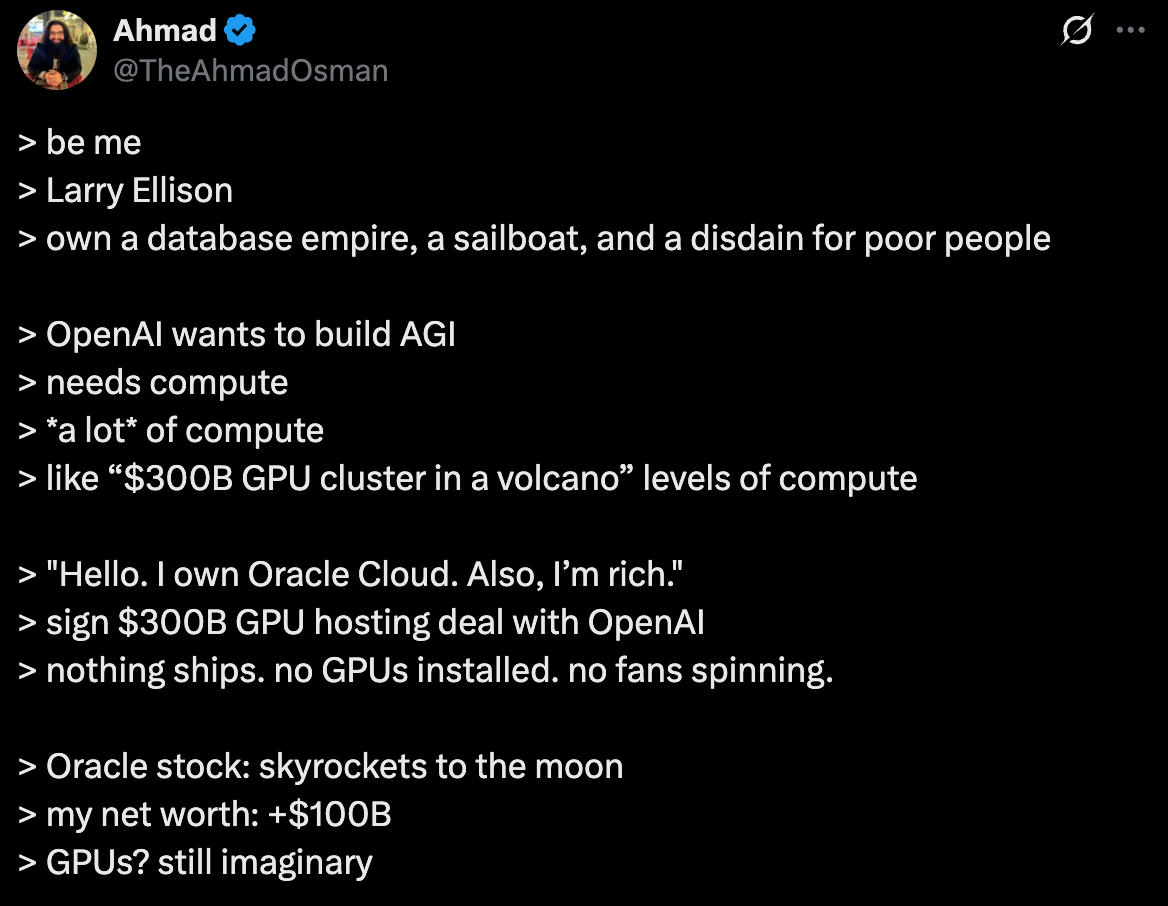

Oracle Valuation Jump

This week, Oracle stock jumped over 30% after the company announced that it had signed preliminary agreements with multiple large AI companies, sending its contracted future revenue (remaining performance obligations) up roughly 360%. This did end up making founder Larry Ellison the richest man on earth, which feels a bit circular given that he has invested in a lot of these companies himself.

How money works:

1. OpenAI signs $300B GPU deal with Oracle
2. Larry gains $100B (no GPUs shipped)
3. Larry invests in OpenAI’s $1T round
4. Sam uses $300B to pay Oracle
5. Oracle stock pumps again
6. Larry makes another $100B
7. Larry invests in OpenAI

Flywheel go brrr.

— Yuchen Jin (@Yuchenj_UW) September 12, 2025

Nvidia: China Antitrust

China played a bit of a Uno reverse card today, accusing Nvidia of violating its anti-monopoly laws and discouraging the use of certain Nvidia and AMD GPUs in government contracts (in favour of domestic GPUs). After years of trade restrictions and tariffs aimed at blocking China's access to US GPUs (which never really worked), it turns out China now doesn't actually want them? Feels like a bit of a dig.

BREAKING: China says Nvidia, $NVDA, has violated anti-monopoly laws after conducting a “preliminary probe.” pic.twitter.com/ILDBZRE5QS

— The Kobeissi Letter (@KobeissiLetter) September 15, 2025