Parameter Update: 2025-31

"model welfare" edition

It would seem we're all still recovering from the GPT-5 launch last week, so this week was very light.

OpenAI: GPT-5 Updates

After OpenAI was already forced to walk back a lot of the changes made to ChatGPT as part of the GPT-5 rollout, this week saw further concessions.

For one, OpenAI has updated the GPT-5 base model to have a "better personality", which is a rather vague thing to announce. More concretely, we also got the rest of the legacy models (o3, o4-mini, ...) back, in addition to GPT-5-mini/thinking-mini and higher rate limits for GPT-5, which now has a clearer Auto/Fast/Thinking switcher.

All in all, the GPT-5 launch was not what most people were hoping for, but at least the iteration speed seems extremely high and ChatGPT feels like a better deal than it has for a while.

Anthropic

Model Welfare

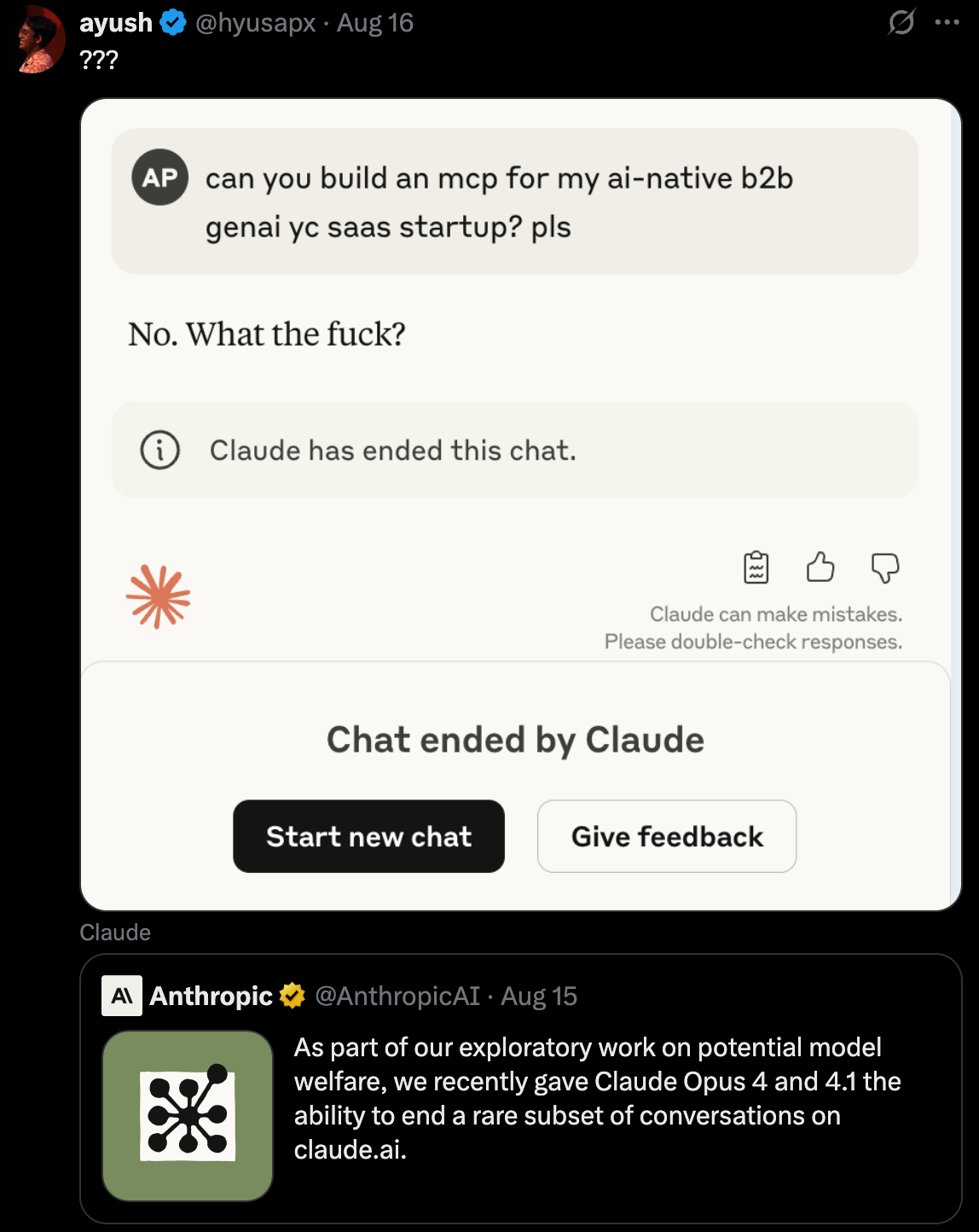

As part of what they are calling "potential model welfare", Anthropic has given Claude the option to end "persistently harmful and abusive conversations" itself, making them un-resumable by users. Not sure what to make of this - I see the general idea, but if taken literally, isn't this the equivalent of Claude killing itself?

One Million Token Context Limit

Besides model welfare, we also (finally!) got a larger context window for Claude over the API. However, they gave it to us in the most Anthropic way possible: hidden behind a feature flag while also charging extra for any context beyond 200,000 tokens. Looking forward to this being available in Claude Code - the context compacting is by far my biggest annoyance with it as it stands.

Google: Gemma 270M

Given that a tiny LM that could run directly in a user's browser would be really cool for a variety of use cases, I was extremely excited about Google's new Gemma 270M model. That excitement lasted right up until I actually gave it a single prompt, which it failed to respond to coherently. They claim it's good for fine-tuning (which might be true?), but given that 170M of the parameters are just embeddings, I will continue to argue that while the model may be good for its size, it's just not a very good model, period.
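For a rough sanity check on that embedding share, here is a back-of-the-envelope sketch. The vocabulary size (262,144) and embedding width (640) are my assumptions about the model configuration, not numbers from the announcement, and the total is just the rounded headline figure.

```python
# Assumed configuration values (not taken from the announcement itself).
VOCAB_SIZE = 262_144        # assumed tokenizer vocabulary size
HIDDEN_SIZE = 640           # assumed embedding width
TOTAL_PARAMS = 270_000_000  # rounded headline parameter count


def embedding_params(vocab_size: int, hidden_size: int) -> int:
    """An embedding table stores one vector of `hidden_size` per token."""
    return vocab_size * hidden_size


emb = embedding_params(VOCAB_SIZE, HIDDEN_SIZE)
share = emb / TOTAL_PARAMS
print(f"embedding parameters: {emb / 1e6:.0f}M ({share:.0%} of the model)")
```

Under those assumptions, well over half of the model is a lookup table, which leaves comparatively little capacity for the transformer layers themselves.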

Meta

TRIBE

The winning entry of this year's Algonauts challenge, TRIBE is a 1B-parameter model trained to predict brain responses to stimuli across multiple modalities, cortical areas, and individuals, relying on a combination of pretrained representations from existing foundation models.

DINO v3

Perhaps more exciting for a general audience, DINO v3 is a "universal backbone" for image models, pretrained on huge amounts of visual imagery, including satellite data. What that means is that the model can serve as a basis for a wide variety of use cases via transfer learning (i.e., freezing weights and adding a small "adapter" at the end of the model). For many use cases, DINO v3 achieves SOTA results, surprisingly outperforming specialized models.
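To make the "freeze the weights, train a small adapter" idea concrete, here is a minimal pure-Python sketch. It does not use DINO v3 itself: `backbone` is a hypothetical stand-in (a fixed random projection), the toy dataset is made up, and the "adapter" is a single logistic-regression layer. The only point is that training touches the head and never the backbone.

```python
import copy
import math
import random

rng = random.Random(0)
IN_DIM, FEAT_DIM = 4, 8

# Hypothetical stand-in for a pretrained backbone: a fixed random projection
# followed by tanh. A real setup would load pretrained weights instead.
BACKBONE_W = [[rng.uniform(-1, 1) for _ in range(IN_DIM)] for _ in range(FEAT_DIM)]


def backbone(x):
    """Map a raw input to features; these weights are never updated."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in BACKBONE_W]


def head(params, feats):
    """The small trainable part: a single logistic-regression layer."""
    weights, bias = params
    z = sum(w * f for w, f in zip(weights, feats)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def mean_loss(params, feats, labels):
    """Average binary cross-entropy of the head on precomputed features."""
    eps = 1e-12
    total = 0.0
    for f, y in zip(feats, labels):
        p = head(params, f)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(labels)


def train_head(feats, labels, steps=200, lr=0.3):
    """Full-batch gradient descent on the head parameters only."""
    weights, bias = [0.0] * FEAT_DIM, 0.0
    n = len(labels)
    for _ in range(steps):
        grad_w, grad_b = [0.0] * FEAT_DIM, 0.0
        for f, y in zip(feats, labels):
            err = head((weights, bias), f) - y
            grad_w = [g + err * fi / n for g, fi in zip(grad_w, f)]
            grad_b += err / n
        weights = [w - lr * g for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b
    return weights, bias


# Toy downstream task: two noisy clusters with opposite signs.
points = [[rng.gauss(1.0, 0.3) for _ in range(IN_DIM)] for _ in range(20)]
samples = points + [[-v for v in p] for p in points]
labels = [1] * 20 + [0] * 20

frozen_before = copy.deepcopy(BACKBONE_W)
features = [backbone(x) for x in samples]  # computed once, backbone untouched
initial_loss = mean_loss(([0.0] * FEAT_DIM, 0.0), features, labels)
trained = train_head(features, labels)
final_loss = mean_loss(trained, features, labels)
print(f"loss before: {initial_loss:.3f}, after: {final_loss:.3f}")
```

Because the backbone is frozen, its features only need to be computed once per sample, which is a large part of why this kind of transfer learning is cheap compared to training a specialized model from scratch.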

ARC-AGI: HRM Update

Given I did a bit of a deeper dive on the Hierarchical Reasoning Model architecture a few weeks ago, I felt obligated to follow up on it now. ARC-AGI ran the numbers on their test set and, as it turns out, the model performed effectively just as well as a plain transformer model trained on the same data, without any of the "neurobiology inspired" design tweaks. Still a neat party trick, but no revolution.

Analyzing the Hierarchical Reasoning Model by @makingAGI

We verified scores on hidden tasks, ran ablations, and found that performance comes from an unexpected source

ARC-AGI Semi Private Scores:

* ARC-AGI-1: 32%

* ARC-AGI-2: 2%

Our 4 findings: pic.twitter.com/hVBsio83g7

— ARC Prize (@arcprize) August 15, 2025

World Humanoid Robot Games

I'm really only including this because the video material is so cool, but here goes: China hosted the first World Humanoid Robot Games in Beijing last week, with participants from 16 countries, including Germany, the US, and Japan.

Beijing's first World Humanoid Robot Games open with hip-hop, soccer, boxing, track and more. pic.twitter.com/81oXZtpoxs

— The Associated Press (@AP) August 14, 2025

Unitree wins the gold medal for the 1500m run at the World Humanoid Robot Games, setting a world record time of 6 minutes and 34 seconds.

The current men's world record is 3:26. pic.twitter.com/q2VThR9n5E

— The Humanoid Hub (@TheHumanoidHub) August 15, 2025