Parameter Update: 2025-30

"chart crime" edition

Ladies and gentlemen, it's a big one!

OpenAI

GPT-5

Two and a half years after the original GPT-4 announcement, after months of leaks, vagueposting and speculation, we finally got GPT-5 this week. And, as it stands right now, people are primarily mad and disappointed. As usual though, there is some nuance to be had here.

First off, what did we get? In total, there are six new models, served through a unified router:

- GPT-5: The normie one. Non-reasoning, new standard model, replacing GPT-4o. Much more capable, but still definitely not a reasoning model.

- GPT-5 Thinking: The big boy. Replacing o3 while being slightly more capable but much nicer to talk to. This is the one you want.

- GPT-5-Thinking-Pro: The big, big boy. Replacing o3-pro. Only available to Pro subscribers (not even via API) and, therefore, very pricey.

- GPT-5-mini: Not available in ChatGPT just yet. Replacement for 4o-mini - will be interesting to see if it is as much of a downgrade as 4o->4o-mini is.

- GPT-5-thinking-mini: o4-mini replacement. Also not available in ChatGPT yet, but might make losing o3 more bearable if it's good.

- GPT-5-thinking-nano: API only, advertised as a replacement for GPT-4.1-nano (and extremely cheap!). Haven't seen too many benchmarks yet, but promising if it's not shit.

The disappointment on my timeline is partly because each of the models is only slightly better than what we had before, often in some hard-to-measure ways (e.g., it remains extremely challenging to quantify the improvements in writing quality - though I was positively surprised, at least by the thinking model!). On the other hand, it also feels undeniable that (at least initially) Plus users got the short end of the stick - losing their 2200 guaranteed reasoning messages per week in exchange for just 200/week to GPT-5-thinking. They also get 80 messages every three hours to regular GPT-5, but those will only reason if you beg for it, which seems bad. It almost seemed inevitable, then, for OpenAI to un-screw users by raising the limit to 3000 reasoning messages per week (which, to be clear, is a much better deal than before!). In stark contrast to this back-and-forth sit the updated API prices, which are just genuinely very good.
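To put the Plus limits side by side, here is a quick back-of-the-envelope sketch. It uses only the numbers quoted above; treating the "80 messages every three hours" limit as non-overlapping windows is my simplifying assumption (so the weekly figure is a theoretical ceiling nobody will actually hit), and the names are made up for illustration.

```python
HOURS_PER_WEEK = 7 * 24


def weekly_cap(messages_per_window: int, window_hours: int) -> int:
    """Theoretical weekly ceiling for a per-window limit.

    Assumes back-to-back, non-overlapping windows (a simplification).
    """
    return messages_per_window * (HOURS_PER_WEEK // window_hours)


# Weekly reasoning-message limits for Plus, as quoted in the text.
old_reasoning = 2200      # before GPT-5
launch_reasoning = 200    # GPT-5-thinking at launch
raised_reasoning = 3000   # after the increase

launch_share_pct = launch_reasoning / old_reasoning * 100
raised_ratio = raised_reasoning / old_reasoning
regular_ceiling = weekly_cap(80, 3)

print(f"Launch limit vs. old limit: {launch_share_pct:.1f}%")
print(f"Raised limit vs. old limit: {raised_ratio:.2f}x")
print(f"Regular GPT-5 ceiling: {regular_ceiling} messages/week")
```

So the launch limit was roughly a tenth of what Plus users had before, which goes a long way towards explaining the mood.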

It also felt like there was a whole lot of awkwardness around the launch. The hour-long livestream felt stilted, with hilariously broken charts, drawn-out demos and an energy I saw someone describe as "a funeral hosted by minimalists". Making things worse, the model router was broken directly after launch, making the system seem much dumber than it is (there are some interesting conspiracy theories around it!) and they decided to immediately deprecate all old models, leading to a surprisingly huge backlash as tons of emotionally dependent people on Reddit begged for their friend back (genuinely did not see this coming!). This isn't meant to take away from the launch. This week, close to a billion people got a huge model upgrade (remember that free users were mostly stuck on GPT-4o until now), and any rollout at this scale will always be hard. OpenAI has since made some improvements: temporarily(!) increased rate limits for Plus users, more transparency on which model is being used, and giving people GPT-4o back. That being said, between the disappointing model improvements, poor presentation, paid app colour unlocks (??) and the miserable handling of the launch, OpenAI appears to be caught on the back foot for the first time in a while. The next couple weeks will be interesting.

GPT-oss

While GPT-5 unsurprisingly dominated my timeline, we also finally got OpenAI's long-awaited open source model this week. And, as it turns out, there are two of them! Coming in both 20B and 120B variants, gpt-oss claims to be somewhere between o3-mini and o3 in terms of pure benchmark performance, while fitting on your laptop or a single GPU, respectively.
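For a rough feel of what "fits on your laptop or a single GPU" takes, here is a minimal sketch of the memory needed for the weights alone. The parameter counts are the rounded 20B and 120B from above; the bit widths are purely illustrative assumptions on my part (not a statement about how the released checkpoints are stored), and KV cache and activations are ignored entirely.

```python
def weight_memory_gb(params_billion: float, bits_per_param: float) -> float:
    """Approximate weight memory in GB (1 GB = 1e9 bytes).

    Ignores KV cache, activations and runtime overhead.
    """
    return params_billion * bits_per_param / 8


# Illustrative precisions only: 16-bit vs. a hypothetical 4-bit quantization.
for name, params_billion in [("20B", 20), ("120B", 120)]:
    for bits in (16, 4):
        gb = weight_memory_gb(params_billion, bits)
        print(f"{name} at {bits}-bit: ~{gb:.0f} GB")
```

Under these assumptions, the big model only gets anywhere near single-GPU territory at low precision, so the "fits on" claims presumably lean on aggressive quantization.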

After playing around with the models for a bit, I have, once again, mixed opinions. On the one hand, the models are among the smartest open source models we have seen to date. I'm also very glad that they released them under the Apache 2.0 license (not sure what that "small addition" was meant to accomplish, though). On the other hand, their multilingual performance is atrocious (in my experience: worse than Llama 2!), the models' factuality is generally very low and they're just not very fun to chat with.

It would seem, then, that OpenAI's business plan of "open source o3 on Tuesday to make everyone else's offerings worthless, then GPT-5 on Thursday so we stay ahead" failed on two fronts. First, their open source model is markedly worse than o3 in real-world use. Second, GPT-5 is not a significant upgrade in the majority of use cases. The whole thing is a bit sad, really.

Genie 3

In what appears to be a throwback to this extremely specific meme:

My god they've actually done it https://t.co/MzRIhEz3AZ pic.twitter.com/89NSPZMJNf

— Jason Ganz (@jasnonaz) August 5, 2025

Demis & team gave us Genie 3, the best world model to date, this week. As far as I can tell, the rate of progress here is insane (remember the Decart Minecraft demo?) and the whole thing makes for some extremely impressive (albeit very specific) demos.

If you haven't played around with the demo website yet, I would encourage you to at least give the "promptable events" portion a shot.

Anthropic: Claude Opus 4.1

Determined to not let OpenAI get _all_ the thunder this week, Anthropic gave us a very minor Claude Opus upgrade titled 4.1 (finally a good name!).

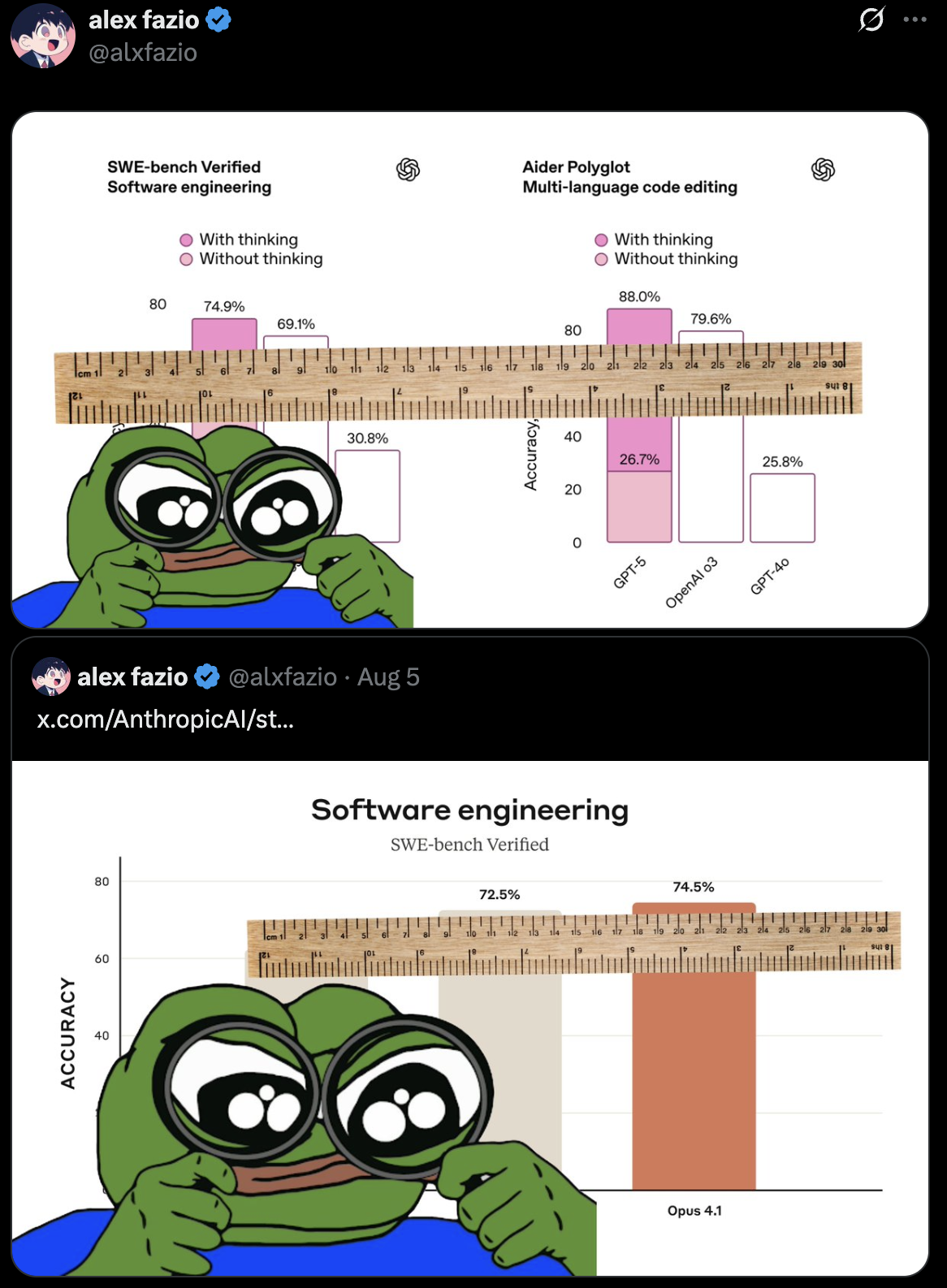

While I've seen some people complain about the lack of error bars in the announcement (as Anthropic has written an entire blog post about this exact thing in the past), this is certainly not the worst chart crime of the week, so I'll just take the free 2% performance improvement. Also, I like them being good sports about the small (but free!) gains compared to whatever fuckery OpenAI is up to at the moment.

Ridiculous that OpenAI claimed 74.9% on SWE-Bench just to prove they were above Opus 4.1’s 74.5%…

By running it on 477 problems instead of the full 500.

Their system card only says 74% too. pic.twitter.com/jAHwhZXyEB

— Deedy (@deedydas) August 8, 2025
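To see why the 477-vs-500 thing matters, here is a small sketch of the best and worst case for a score on the full set. It only uses the numbers from the tweet; I have no idea how the 23 skipped problems would actually have gone, so the two bounds simply assume they are all failures or all successes. The function name is made up for illustration.

```python
def full_set_bounds(reported_pct: float, n_run: int, n_total: int) -> tuple[float, float]:
    """Worst-case and best-case score (in %) on the full problem set.

    Worst case: every skipped problem counts as a failure.
    Best case: every skipped problem counts as a success.
    """
    solved = reported_pct / 100 * n_run   # implied solved count (may be fractional)
    skipped = n_total - n_run
    low = solved / n_total * 100
    high = (solved + skipped) / n_total * 100
    return low, high


low, high = full_set_bounds(74.9, 477, 500)
opus_pct = 74.5  # Opus 4.1 figure quoted in the tweet

print(f"Full-set score lies between {low:.2f}% and {high:.2f}%")
print(f"Opus 4.1 figure inside that range: {low < opus_pct < high}")
```

In other words, with a 0.4 point gap and 23 problems left out, the ranking could go either way depending on the problems that were skipped.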

Other launches

ElevenLabs: Eleven Music

Certainly overshadowed by the other stuff we got this week, but ElevenLabs dropped their Suno competitor. It's a bit on the pricey side (and prompting input is a bit more limited), but the results are really nice!

Introducing Eleven Music. The highest quality AI music model.

- Complete control over genre, style, and structure

- Multi-lingual, including English, Spanish, German, Japanese and more

- Edit the sound and lyrics of individual sections or the whole song pic.twitter.com/PmSOeoFe0G

— ElevenLabs (@elevenlabsio) August 5, 2025

Alibaba: Qwen Image

Also under the radar, Alibaba gave us their new image model. While some of the outputs feel squarely in the uncanny valley, the text precision is incredible for a model without any autoregressive/separate-text-handling components! Not sure what suitable use cases for it may look like, but still: neat!

🚀 Meet Qwen-Image — a 20B MMDiT model for next-gen text-to-image generation. Especially strong at creating stunning graphic posters with native text. Now open-source.

🔍 Key Highlights:

🔹 SOTA text rendering — rivals GPT-4o in English, best-in-class for Chinese

🔹 In-pixel… pic.twitter.com/zT9CFLzWkV

— Qwen (@Alibaba_Qwen) August 4, 2025

Ideogram Character

Technically already a week old, but since it flew under my radar last week: Ideogram is back from the brink of irrelevancy, and they brought a very impressive model with them! We've seen the promise of single-image character cloning/consistency before, but this is the first time I tried it with an image of myself and didn't immediately dismiss it afterwards! As in: It's still obviously not me, but it's certainly a lot closer than any other model has got so far!

In other news

Cloudflare Perplexity Drama

According to Cloudflare (which just a few weeks ago started blocking AI crawlers by default!), Perplexity has been caught trying to circumvent these blocks with some very creative tactics. Perplexity's defence is that the crawling here is user-driven (i.e., happens once the user searches, not to build an index in advance) which may not actually be a terrible argument?

Perplexity is repeatedly modifying their user agent and changing IPs and ASNs to hide their crawling activity, in direct conflict with explicit no-crawl preferences expressed by websites. https://t.co/yToVAmwcwn

— Cloudflare (@Cloudflare) August 4, 2025

Windsurf

If you thought the Windsurf drama was finally settled with the acquisition of the brand name and rest of the team by Devin-developer Cognition, this week proved you wrong. Last week, Cognition fired 60 of the people they just acquihired and is now offering buyouts to the rest. Not sure what went down here? It seems unlikely that Cognition was primarily interested in the brand name? On the other hand, I am not sure why they were expecting exceptional talent after Google already took senior leadership and whoever else they felt like. Still sucks for the people affected though - must have been a wild couple weeks for them.