Parameter Update: 2025-22

"liquid glass" edition

Apple: WWDC 2025

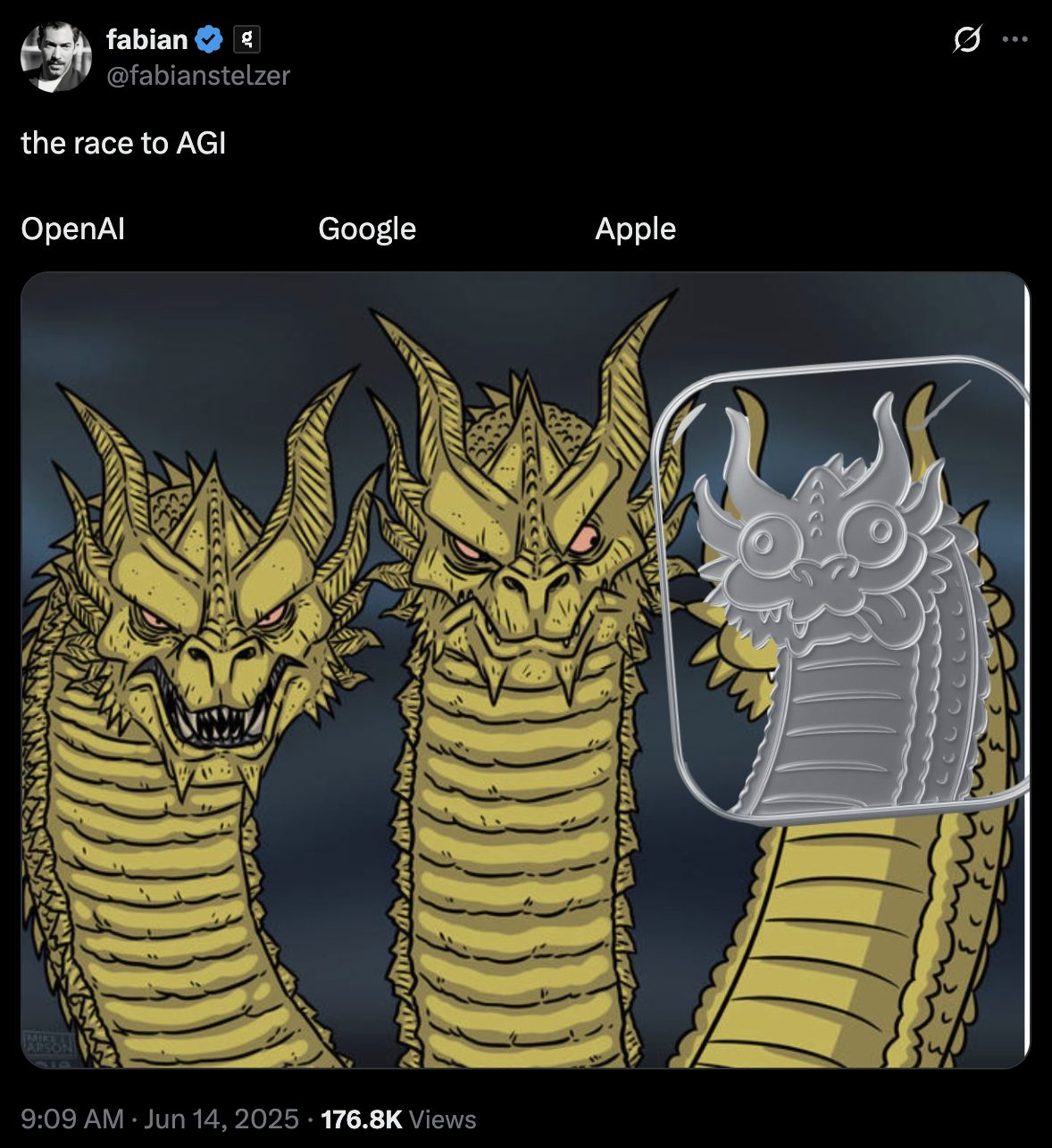

After most of us spent the last ~year making fun of Apple for their AI fumbles, and with things not looking up (at least judging by their recent research output), I was not expecting much from WWDC this week.

And popular sentiment on my timeline seems to indicate that we got just that - much ado about nothing (and very mixed opinions on the Liquid Glass thing - I personally really like it!). But I feel the need to disagree for once. While AI Siri was notably absent (mentioned only briefly at the start), it did feel like Apple shipped everything but the main dish, in what was a much more cohesive offering than last year, at least for developers:

- Live Translation in a bunch of places

- Call screening, hold assist,…

- Developers get access to Apple's on-device models and loads of new APIs

- Visual intelligence works on screenshots (and is also extensible by developers!)

- AI-powered Shortcuts (composable with a revamped Spotlight and the Intents API)

Instead of building yet another chatbot, Apple aims to position AI in a way that is seamless, personal and deeply integrated. And while others are shipping things that could grow into something cool one day, Apple is mostly doing that for developers (while telling end users to wait just a little longer). With this in mind, many of the non-AI announcements we got “pay into” the larger Apple Intelligence feature bucket. It’s very clever, actually - it’s just a shame you needed to watch an hour-long interview instead of them just explaining things in the main presentation.

Mistral: Magistral

It feels like Mistral once again made a release solely to make me eat my own words, while still barely clinging to competitiveness. After bemoaning the lack of a new large model and/or reasoning model from the European AI company, this week we got Magistral, their first reasoning model, built on top of their existing Medium 3 and Small 3.1 models and released in two sizes:

- Magistral Medium, only available through their API, roughly competitive with DeepSeek R1 (the original, not the "-0528" variant that matches o3)

- Magistral Small, released fully Open Source, competitive with R1 distillations of similar size

While I am extremely happy to see Europe compete (and reasoning in multiple languages is a neat differentiator!), it does feel like Mistral arrived to the party a bit late here. Given their recent plays, it's mostly unsurprising to see the larger model variant remain closed for now, though at least their API pricing remains competitive with the o3 price cuts (while still offering a worse model overall, so idk). The most exciting thing about this release, then, is probably the in-depth technical report that really seems like a good read.

OpenAI

In a move I somewhat expected, OpenAI has cut the API pricing for their o3 model! What I didn't expect: they did so by a rather dramatic 80%, while also finally giving us o3-pro. That means GPT-4o is now more expensive than o3 on the API (and is no longer listed as a recommended model there - not sure what's up with that?), while o3-pro costs twice what o3 did before. While this may seem like an odd move in isolation, it was pretty likely motivated by the updated DeepSeek R1 variant (thanks, China!).
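The price relationships above are easy to check. Writing $P$ for o3's old price (relative terms only, no actual dollar figures assumed, and assuming the same ratios apply to input and output tokens alike):

$$
p_{\text{o3, new}} = (1 - 0.8)\,P = 0.2\,P,
\qquad
p_{\text{o3-pro}} = 2\,P = \frac{2}{0.2}\,p_{\text{o3, new}} = 10\,p_{\text{o3, new}}
$$

So o3-pro ends up at exactly 10x the new o3 price, which is the ratio the majority-voting speculation about o3-pro leans on.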

As for o3-pro: If you can stomach the price and response time, it seems to be the best model out there. I saw some speculation about it being just 10x o3 queries with majority voting, which would make sense given it is 10x the price, but subjectively the outputs do seem better than that approach would probably produce? Either way, I am not usually up for waiting 10+ minutes, so I'll hold off until this kind of performance filters down a bit.
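To make the "10x o3 queries with majority voting" speculation concrete: it would mean sampling ten independent answers and returning the most common one. Here is a minimal sketch, assuming a hypothetical `ask_model` callable that stands in for a single o3 request. This is not OpenAI's API, and nothing here reflects how o3-pro actually works.

```python
import itertools
from collections import Counter
from typing import Callable


def majority_vote(ask_model: Callable[[str], str], prompt: str, n: int = 10) -> str:
    """Query the model n times and return the most frequent answer.

    `ask_model` is a hypothetical stand-in for one model request.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    answers = [ask_model(prompt) for _ in range(n)]
    # Counter.most_common lists equal counts in first-seen order,
    # so ties go to the earliest answer.
    return Counter(answers).most_common(1)[0][0]


# Fake "model" that cycles through canned answers, purely for illustration.
canned = itertools.cycle(["42", "41", "42"])
print(majority_vote(lambda _prompt: next(canned), "What is 6 x 7?", n=10))  # prints 42
```

Exact-match voting like this only works when answers are short and canonical (a number, a multiple-choice letter); for free-form answers you would need some notion of which outputs count as "the same".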

ByteDance

If you were wondering whether ByteDance was still building video generation models: Of course they are! And with Seaweed APT2, interactive TikTok seems closer than ever. The model is capable of real-time video generation at 24 FPS on 8x H100s (that may seem like a lot of hardware, but it is also orders of magnitude faster than models from just a few months ago!) and - and this is really cool - the output is steerable in real time, meaning they can effectively rig a virtual avatar.

Bytedance first to the holodeck with a steerable real time video generation world model. Up to a minute long videos 24 fps in HD resolution on 8xH100s.

It's only an 8 billion param model... pic.twitter.com/oDEy0B6Jqe

— Prakash (Ate-a-Pi) (@8teAPi) June 12, 2025

Meta: Accidentally shared chats

In other news: Facebook is involved in another privacy scandal! I know, the horrors! How unexpected!

Anyway, this time it seems to be only partly their fault, as loads of less savvy users publish their Meta AI chats to the app's social feed by accident. Now, on the one hand, you could rightfully blame them for not understanding that a "Share" button would indeed... share? their chat. On the other hand, I would ask why a chatbot app needs a social feed at all. idk, seems bad.

Wild things are happening on Meta’s AI app.

The feed is almost entirely boomers who seem to have no idea their conversations with the chatbot are posted publicly.

They get pretty personal (see second pic, which I anonymized). pic.twitter.com/0Hoff1psPU

— Justine Moore (@venturetwins) June 11, 2025