Parameter Update: 2025-21

"[laughs]" edition

TTS Wars

I will admit that if there is an area of consumer AI I've not paid enough attention to over the last little bit, it is probably the rise of hyperrealistic text-to-speech models. This was exemplified by two players last week:

Bland put out a demo that clones a speaker’s voice so faithfully that it almost made up for some of the worst lip-syncing I've ever seen. ElevenLabs, meanwhile, has put out an alpha version of their v3 model, which lets you drop stage directions like [whispers] or [laughs] directly into the text prompt and renders them in 70-plus languages. Honestly scared for my parents (and myself) once scammers start to realize these things exist.

Today we’re excited to introduce Bland TTS, the first voice AI to cross the uncanny valley.

— Bland (@usebland) June 4, 2025

Several months ago, our team solved one-shot style transfer of human speech. That means, from a single, brief MP3, you can clone any voice or remix another clone’s style (tone, cadence,… pic.twitter.com/zD0ZNEowL5

What are the best generations you've heard with the new Eleven v3 (alpha) model?

— ElevenLabs (@elevenlabsio) June 6, 2025

Here are a few from the team.

First: an AI that actually laughs. pic.twitter.com/tb9lltFgy5

Cursor

After soaring past $100 Million ARR just a few months ago, Anysphere (Cursor) has now reached $500M ARR - and, based on that number, raised $900M at an eye-watering $9.9 Billion valuation. Between snorting lines of coke and other questionable business expenses (if Cluely's marketing stunts are anything to go by), they also used the occasion to finally release Cursor 1.0 with another slew of updates, mostly focussed on moving away from being "just a VSCode fork" (Background Agent, BugBot) and integrating further with the ecosystem (one-click MCP install).

OpenAI

Business Update

This week, OpenAI took a break from building AGI to give us... a business tooling update! (exciting, right?)

Anyway, some of the stuff they showed is actually cooler than it may initially sound. I was really excited about MCP support before I realized it is currently limited to Deep Research (boo!), not available in the EU (double boo!), and seemingly fundamentally broken (lol).

The cooler integrations, then, seem to be "Record Mode" (ChatGPT can now transcribe and summarize meetings) and their prebuilt data connectors. I remain sceptical that the latter actually work on real-world data without significant tuning, but am also optimistic that they will help their enterprise sales skyrocket.

Advanced Voice Mode Update

It's a lot better now - feels more like Sesame's legendarily weird Maya demo than the previous iteration. Some people also got it to produce fictional ads, which is one of the weirder hallucinations I've seen.

New York Times Lawsuit

Due to the ongoing lawsuit between The New York Times and OpenAI, the latter is now forced to keep a record of all requests made to their models, including ones it would normally delete within 30 days. This covers their API and even "Temporary Chat" mode.

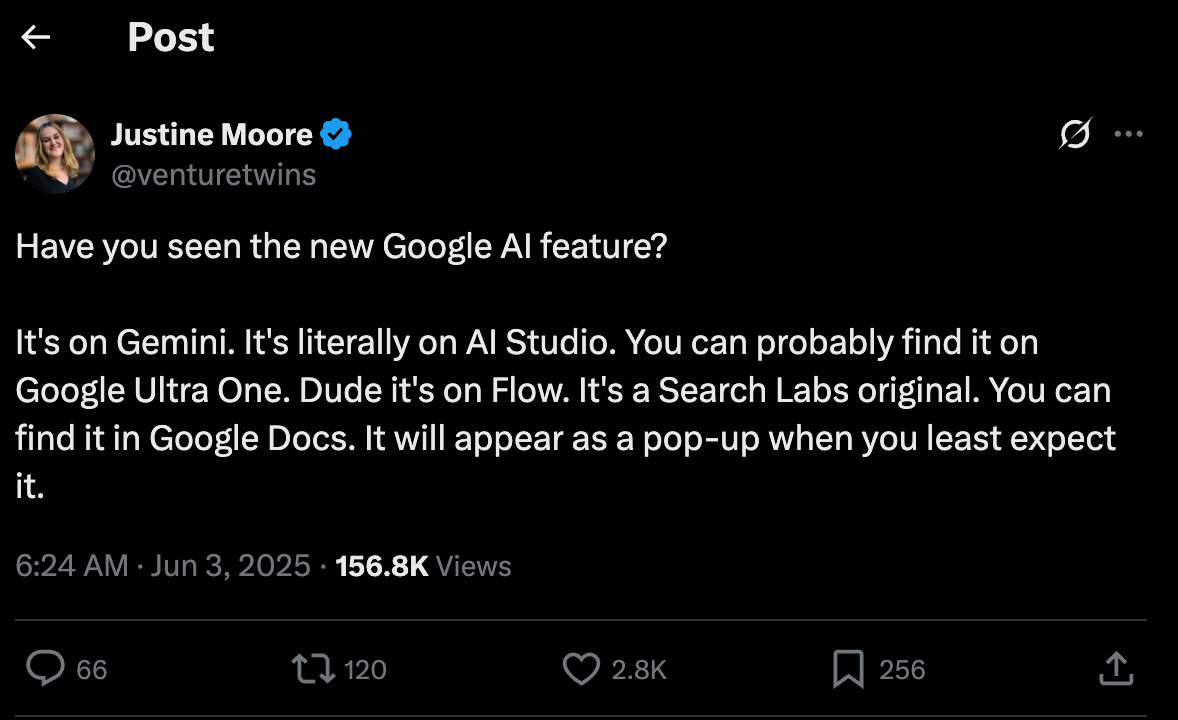

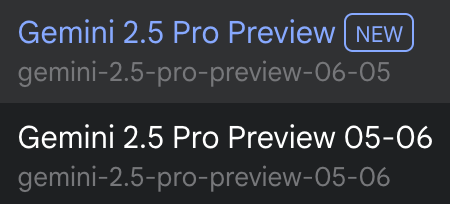

Google

Once again competing for the worst name yet, Google has released their umpteenth iteration of Gemini 2.5 Pro - officially called gemini-2.5-pro-preview-06-05, following in the footsteps of gemini-2.5-pro-preview-05-06.

Fortunately, it seems that this release is actually good and is the release candidate that may make it to production (finally!).

Our latest Gemini 2.5 Pro update is now in preview.

— Sundar Pichai (@sundarpichai) June 5, 2025

It’s better at coding, reasoning, science + math, shows improved performance across key benchmarks (AIDER Polyglot, GPQA, HLE to name a few), and leads @lmarena_ai with a 24pt Elo score jump since the previous version.

We also… pic.twitter.com/SVjdQ2k1tJ

In other news, Veo 3 now has a much cheaper "Turbo" mode that seems ideal for tuning prompts before committing to a run on the full model - I'm still waiting for cheaper access overall, but this seems like a very major step in the right direction!

Apple

While we are all waiting for WWDC later today, Apple already made some headlines last week by publishing a paper that claims to discredit "LLM reasoning" as a whole. While my timeline has been filled with discussion, it seems that, no matter what your take is, there are some flaws in their methodology that may need to be addressed. Let's hope this is not a sign of things to come for the company that has mostly seemed AImless when it came to AI in recent months.