Parameter Update: 2025-08

"robots!" edition

xAI: Grok 3

After I predicted a load of announcements last week, it turned out we got exactly one of them: Grok. In one word, this one is weird.

The Good:

- Initial benchmarks turned out to be really impressive - a lot closer to the "next gen" model I expected Gemini 2.0 Pro to be.

- Not sure if it is also new, but this is the first time I've used the Grok website, and it feels insanely polished, even compared to ChatGPT. I also love the way they show the chain of thought.

- The team has promised improvements "almost every day" (though I am not sure if having everyone in the office until midnight the day before launch is a good indicator of sustainable work-life balance or time management)

- Model access is free (for now?) with what appears to be pretty generous rate limits.

The Bad:

- "Real world" coding performance (or at least the ball in cube demo everyone loves doing) seems to be... not great. That has also been my personal experience.

- I would've appreciated some more clarity in their product communication - what is the difference between "Thinking" and "Big Brain Mode" except that the latter takes longer and sounds extremely cringe?

- While also very funny, it's slightly troubling that it seems you can get around most safety tuning by negging Grok?

The Ugly:

- While some of the benchmarks appear a bit spotty, it's been interesting to witness the vibe shift this launch has caused, including some pretty petty beef where OpenAI staff weren't on their best behavior either.

- As the base model might be the most left-leaning we've seen yet (which is supremely hilarious coming from the tech bros paid by Musk), the team has resorted to very dumb and frankly embarrassing moves like hardcoding a pro-Trump/Musk bias directly into the system prompt. After they got caught, their response was also supremely embarrassing.

As it stands, Grok 3 is fascinating. It's extremely impressive and, frankly, better than I was expecting. The UX feels polished and I've gotten some deeply impressive results from the model. At the same time, there are some rather obvious shortcomings (where's the API? Do they expect anyone to use any of this when they continue to hardcode propaganda into the system prompt?) and, similarly to Gemini 2.0 Pro, anyone looking for a step-function increase in capabilities will be disappointed yet again.

Figure: Helix

Somewhat surprisingly, the past week has been packed with robotics-related announcements and demos. Most of these are only somewhat AI-related, but they are all 😎very cool😎, so I'm keeping them in.

The headliner

Last week, Figure announced Helix, their next-gen "vision-language-action model". While I would have loved more technical details (and the "scaling law" graph from the blog post feels ridiculous), this is our clearest look yet at why they very publicly broke up with OpenAI just a few weeks ago. On the plus side, we got an impressive demo video, plus a nice diagram explaining how they split world understanding between two differently sized models, similar to the concept of System 1 and System 2 thinking in humans.

The System 1 model is just 80M parameters in size and runs at 200Hz, while the System 2 model is a more sizable 7B pretrained VLM running at a (still very impressive) 7-9Hz. The special sauce, then, lies in the integration of the two, allowing latent representations from the smarter but slower model to influence the outputs of the fast model. The fact that they can run the same model across two robots at once also feels like a pretty major step.
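To make the two-speed split a bit more concrete, here's a toy Python sketch of the control loop as I understand it from the blog post. A slow "System 2" refreshes a latent vector a handful of times per second, and a fast "System 1" reads whatever latent is most recent on every tick. Both models are replaced by trivial numpy stand-ins. The latent and action sizes, the 8 Hz midpoint, and all function names are my own assumptions, not Figure's.

```python
import numpy as np

# Rates from the post: System 1 at 200 Hz, System 2 at roughly 7-9 Hz.
FAST_HZ = 200
SLOW_HZ = 8        # assumption: midpoint of the quoted 7-9 Hz range

OBS_DIM = 64       # hypothetical observation size
LATENT_DIM = 16    # hypothetical size of the latent handed from System 2 to System 1
ACTION_DIM = 4     # hypothetical action size


def system2_update(observation: np.ndarray) -> np.ndarray:
    """Stand-in for the slow VLM: compresses an observation into a latent 'intent' vector."""
    return np.tanh(observation[:LATENT_DIM])


def system1_policy(observation: np.ndarray, latent: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """Stand-in for the fast policy: maps the current observation plus the latest latent to an action."""
    features = np.concatenate([observation[:LATENT_DIM], latent])
    return weights @ features


def run(seconds: float = 1.0, seed: int = 0) -> tuple[np.ndarray, int]:
    rng = np.random.default_rng(seed)
    weights = 0.1 * rng.standard_normal((ACTION_DIM, 2 * LATENT_DIM))
    ticks_per_slow_step = FAST_HZ // SLOW_HZ  # 25 fast ticks per System 2 refresh
    latent = np.zeros(LATENT_DIM)
    slow_updates = 0
    actions = []
    for tick in range(int(seconds * FAST_HZ)):
        obs = rng.standard_normal(OBS_DIM)    # fake sensor reading for this tick
        if tick % ticks_per_slow_step == 0:
            latent = system2_update(obs)      # slow path: refresh the latent occasionally
            slow_updates += 1
        actions.append(system1_policy(obs, latent, weights))  # fast path: every tick
    return np.stack(actions), slow_updates
```

Calling `run(1.0)` yields 200 actions but only 8 System 2 updates. A real robot would presumably run the slow model asynchronously rather than blocking inside the fast loop like this sketch does.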

The rest

While less technically interesting, here are some of the other robot announcements that caught my eye last week:

The dancing Unitree G1 video was so awesome that I was actually convinced it was fully AI-generated until the CEO followed it up with another one. Now I merely believe it's staged extremely well.

Unitree G1 mobility is progressing at a shocking speed.

With the cost reduction being the founder’s key KPI, robotics ownership will become a norm soon. The future is coming at us faaaaaaassssssstttttt 🤯 pic.twitter.com/qZlXpHu4Dw

— Jen Zhu (@jenzhuscott) February 14, 2025

Clone's "Protoclone" looks terrifying enough by itself, but for some reason they insist on filming it in a barely lit environment with intense apocalyptic music playing in the background. Who runs their Twitter?

Protoclone, the world's first bipedal, musculoskeletal android. pic.twitter.com/oIV1yaMSyE

— Clone (@clonerobotics) February 19, 2025

Finally, 1X announced NEO Gamma in a video that seems like an intentional antithesis to Clone's, yet comes across as extremely hollow and even less realistic than what Unitree is showing.

Introducing NEO Gamma.

Another step closer to home. pic.twitter.com/Fiu2ohbIiP

— 1X (@1x_tech) February 21, 2025

Stepfun

Just as we were starting to recover from DeepSeek, it seems that China's AI progress is not slowing down any time soon. This week, we've seen some cool drops from a lab I had personally never heard of before: Stepfun AI. Founded in April 2023, they have been busy shipping for a while now, though this is the first time they've garnered notable attention outside China. There are two models of theirs to talk about:

Step-Audio-Chat

The first of the two is Step-Audio-Chat, a 130B audio-to-audio model (a bit of an underserved niche in the open-source space). It handily beats the competition in most benchmarks, though my attempts to get it to perform some German music failed pretty spectacularly.

Step-Video-T2V

The second, and in my opinion more interesting, model is Step-Video-T2V, a competitor to closed-source video models like Sora or the just-launched Veo 2 (which seems mostly noteworthy for its impressively large price tag). Looking at their demos, I am starting to realize that benchmarking video models might be even harder than it is for text models. Who is working on this?

Politics

Thinking Machines

Since Mira Murati left OpenAI in September last year, I've been waiting to see what she's cooking. Well, now we know... something? Thinking Machines will be an "artificial intelligence research and product company" that has managed to acquire some pretty intense talent. Excited to see how quickly they can start to execute!

Satya Nadella

Dwarkesh had Satya Nadella on his podcast this week. The entire episode is worth a listen, and it notably included some pretty significant vibe shifts: after pushing for more and more capex last year, Nadella is now a lot more careful in his wording and promises. I suspect at least some of that is related to OpenAI shifting their compute away from Microsoft?

Humane

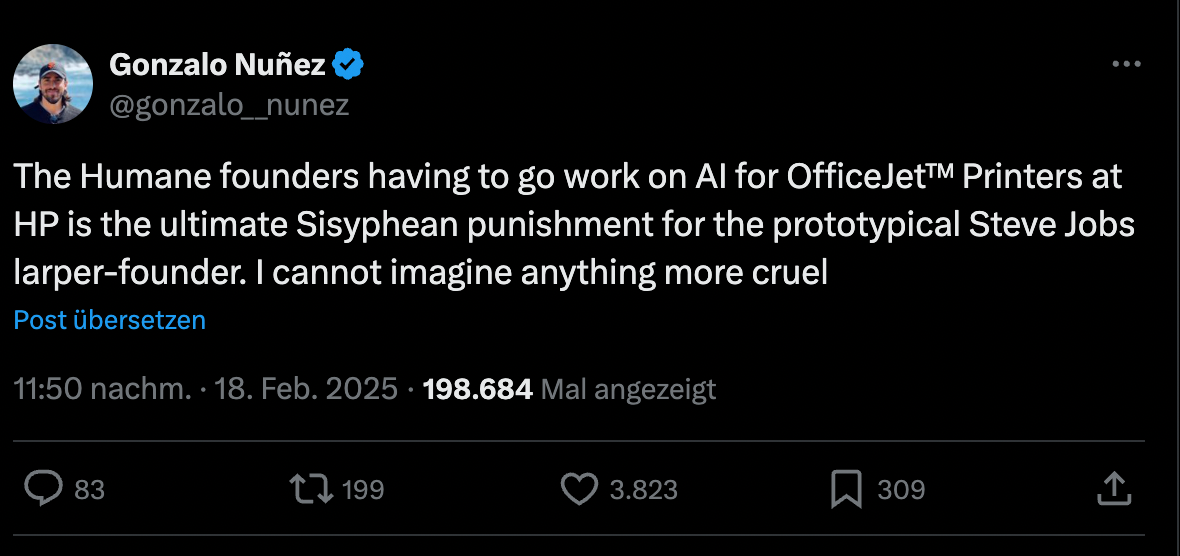

Not sure where else to put this, but I didn't want to leave it out: Humane is dead. On the one hand, this sucks for the dozens of customers that were still using their AI pin. On the other hand, it has led to some pretty great memes.

imagine your company eats shit so hard you are doomed to work at the HP printer division. Didn't even know that was a possible outcome

— Daniel (@growing_daniel) February 19, 2025

Personally, I am genuinely surprised they didn't fail a lot harder after their initial launch. Sure, $116 million is pretty far from their peak valuation of $850 million, but with employees keeping their jobs for now, this actually seems like a pretty okay outcome?

Phind

While I'd heard of (and even used) Phind before, I would have completely missed their soft relaunch of the entire product if my colleague Florian hadn't told me about it (thanks!). After reading through their very detailed blog post and trying the new search myself, I find it in many ways more polished than the equivalent products from major players like Perplexity. I can't believe they're building this with a team of just four people. Hats off!

Research

DeepSeek: Native Sparse Attention

DeepSeek seems to have missed the memo about not comparing your attention alternatives to the real deal (because you'll lose dramatically) and has developed something... better? Not just more computationally efficient, but actually getting better results? Also check out the paper, it's really cool.
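For anyone who hasn't looked at sparse attention before, here's the core trick in a few lines of Python. First score coarse blocks of keys, then run ordinary softmax attention only over the tokens in the best-scoring blocks. This is a heavily simplified, single-query illustration of block selection in general, not DeepSeek's NSA. The paper combines compressed, selected and sliding-window branches with learned gating and hardware-aligned kernels, and none of that is modeled here. Scoring blocks by their mean key is my own simplification.

```python
import numpy as np


def softmax(x: np.ndarray) -> np.ndarray:
    x = x - x.max()  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum()


def dense_attention(q: np.ndarray, K: np.ndarray, V: np.ndarray) -> np.ndarray:
    """Reference: full softmax attention for a single query vector."""
    scores = K @ q / np.sqrt(q.shape[0])
    return softmax(scores) @ V


def block_sparse_attention(q, K, V, block_size=16, top_k=2):
    """Attend only to the top_k key blocks, scored by the query against each block's mean key."""
    n, d = K.shape
    n_blocks = n // block_size  # assumes n is a multiple of block_size
    K_blocks = K[: n_blocks * block_size].reshape(n_blocks, block_size, d)
    block_scores = K_blocks.mean(axis=1) @ q               # one coarse score per block
    chosen = np.sort(np.argsort(block_scores)[-top_k:])    # highest-scoring blocks, in order
    idx = np.concatenate([np.arange(b * block_size, (b + 1) * block_size) for b in chosen])
    scores = K[idx] @ q / np.sqrt(d)                       # fine-grained scores, selected tokens only
    return softmax(scores) @ V[idx], idx
```

With `top_k` equal to the number of blocks, this reduces exactly to dense attention. With smaller values, the softmax only touches `top_k * block_size` tokens instead of the whole sequence, which is where the compute savings come from.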

Google: Co-Scientist

Google claims to have developed an AI system assisting scientific discovery. I didn't look too much into it, as it seemed pretty fluffy, but maybe a cool read?

Microsoft: OmniParser v2 & Muse

In my bookmarks this week I had two Microsoft announcements. The first one is OmniParser v2, a screen parser that allows regular LLMs to interact with UIs.
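The general idea behind screen parsers like this works in three steps. First, turn a screenshot into a structured list of UI elements. Next, let a text-only LLM pick one of them. Finally, map that choice back to screen coordinates. Here's a minimal Python sketch of the last two steps. The `UIElement` record and both helper functions are hypothetical and not OmniParser's actual output format or API. The detection and captioning models that produce the element list are not shown.

```python
from dataclasses import dataclass


@dataclass
class UIElement:
    """Hypothetical record for one parsed screen element (not OmniParser's real schema)."""
    element_id: int
    label: str                                # caption or OCR text for the element
    interactable: bool
    bbox: tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def to_prompt(elements: list[UIElement]) -> str:
    """Serialize parsed elements into plain text an ordinary LLM can reason over."""
    lines = [
        f"[{e.element_id}] {e.label}" + (" (clickable)" if e.interactable else "")
        for e in elements
    ]
    return "Screen elements:\n" + "\n".join(lines)


def click_point(elements: list[UIElement], chosen_id: int) -> tuple[float, float]:
    """Map the element ID picked by the LLM back to a click position (bbox centre).

    Raises StopIteration if the ID does not exist.
    """
    e = next(el for el in elements if el.element_id == chosen_id)
    x1, y1, x2, y2 = e.bbox
    return ((x1 + x2) / 2, (y1 + y2) / 2)
```

The point of the design is that the LLM never has to reason about pixels directly. It only picks an ID from a text list, and the geometry is handled outside the model.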

The other one is Muse, an AI model that generates minutes of AI "gameplay". Honestly, this seems technically really cool, but I still don't see the endgame here?